Marcus Hinds, a geospatial consultant, shares the results of his work using remote sensing and environmental GIS methods to better understand light pollution in the Greater Toronto Area, Canada, and to propose ways of mitigating it.

In his recent article “Take Pride in the Geospatial Profession”, Nathan Heazlewood urged us Geomatics practitioners to be proud of our profession. GIS and Geomatics are a large part of many environmental projects because, let’s face it, environmental projects happen in time and space. That space is always on or beneath the Earth’s surface, and the people responsible for a project’s progress need to know what is happening, where it is happening, why it is happening and who is doing it. Every event is linked to the project in some way.

I’m no stranger to environmental GIS projects. Many of them cross into other disciplines such as Energy, Finance and Engineering and, probably the most controversial of all, Politics.

Light Pollution Mapping in Toronto, Canada

One example of how interdisciplinary an environmental GIS project can become is one I recently worked on: the Light Pollution Initiative with the City of Toronto. The City was looking to reduce its lighting footprint and to find ways of informing Greater Toronto Area (GTA) residents about the sources and effects of light pollution. In this case, light pollution is mainly defined as any form of up-lighting and/or over-lighting that emits unwanted light into the night sky, producing what is known as sky glow.

Sky glow has a number of harmful and non-harmful effects, but the best known is light spreading into suburban and rural areas and drowning out the night sky and the stars. Research in Environmental Health Perspectives has shown that stargazing and night-sky observation are declining rapidly among younger generations, simply because the night sky can’t be seen in most of our cities. Deciduous trees exposed to prolonged light are slower to adapt to seasonal changes. Wildlife such as turtles, birds, fish, reptiles and insects show decreased reproduction as previously dark habitats become brighter. Then there is the increased risk of smog in urban areas associated with periods of heavy light pollution. In humans, light pollution has been linked to sleep deprivation in the short term and, in the long term, to melatonin deficiency, an increased risk of breast and prostate cancer, obesity and a higher probability of early-onset diabetes.

Because a project like this is sensitive to so many variables, such as the layout of the power grid, the culture of the city, socioeconomic classes and urban design, it was a very multidisciplinary feat that required tactical thinking. The response had to draw on principles from Urban Planning, Environmental Engineering, Architecture and Ecology, and the fact that the end users were a broad, largely non-technical audience also had to be factored in. Once I got to work, I realized this project was an onion: the more you looked at it, the more layers you found. Before I knew it, I was thinking about Illumination Engineering, Power Generation and Energy Efficiency, because hundreds of megawatt-hours of electricity are consumed by up-lighting and over-illumination, adding stress to an already strained grid. I also had to consider the health care system, because any ailments stemming from light pollution add to its caseload. It soon became clear how broad (and valuable) environmental GIS really is.

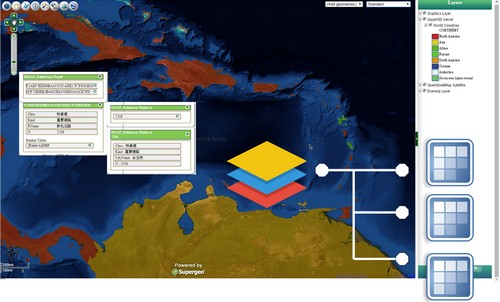

My original plan for the project’s response was to highlight light pollution hotspots throughout the greater city area and compare them with data from the electricity provider. As suspected, the brightest areas on the maps were the most energy-intensive areas of the grid. The real challenge, though, was showing light pollution, which happens at night, when all the available base maps are daytime maps. How do you communicate that? Blending the two came to mind, and that is what I did.
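The blend itself can be illustrated with a short example. The exact blending mode used in ImageJ isn't stated here, so this is a minimal sketch assuming simple linear alpha blending of two co-registered greyscale grids of the same size (values 0 to 255). The function name `alpha_blend` and the `alpha` weight are hypothetical.

```python
from typing import List

Image = List[List[float]]  # row-major greyscale grid, values 0-255 (assumed)


def alpha_blend(day: Image, night: Image, alpha: float = 0.5) -> Image:
    """Linearly blend a daytime base map with a night-time light image.

    Hypothetical helper: `alpha` is the weight given to the night image,
    and 1 - alpha goes to the day base map. Both images must already be
    geo-referenced to the same grid.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if len(day) != len(night) or any(len(a) != len(b) for a, b in zip(day, night)):
        raise ValueError("images must have identical dimensions")
    return [
        [(1.0 - alpha) * d + alpha * n for d, n in zip(day_row, night_row)]
        for day_row, night_row in zip(day, night)
    ]
```

A higher `alpha` lets the night lights dominate while keeping enough of the base map for orientation; for example, `alpha_blend([[200, 200]], [[0, 250]], alpha=0.6)` gives roughly `[[80.0, 230.0]]`.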

To find the light pollution hotspots, I took a Google base map and overlaid a geo-referenced light pollution image of the city taken from NASA’s International Space Station (ISS). Areas of bright up-lighting and sky glow were obvious to the naked eye, but I wanted to show more. I applied lighting standards from the Illuminating Engineering Society of North America (IESNA), which brought IESNA’s series of Recommended Practices (RPs) into the picture.

I used RP-8 for street and roadway lighting, RP-6 for sports and recreational lighting, RP-33 for outdoor lighting schemes, RP-2 for mercantile areas and RP-3 for schools and educational facilities. Each standard prescribes lighting thresholds, the efficient and appropriate light levels for its application, and also discusses the type and quality of light suited to that application. The remaining task was to find how far each point of sky glow on my map exceeded its lighting threshold, and to use that to estimate energy use.
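The step from "how far over the threshold" to an energy figure can be sketched as follows. This is a minimal illustration, not the method used in the project: the threshold numbers below are hypothetical placeholders (they are not values from any IESNA RP document), the `GlowPoint` record and its metered `annual_kwh` are assumed inputs, and energy is assumed to scale linearly with light output.

```python
from dataclasses import dataclass
from typing import Dict

# HYPOTHETICAL placeholder thresholds in arbitrary brightness units.
# These are NOT values taken from IESNA RP-8, RP-6, RP-33, RP-2 or RP-3.
PLACEHOLDER_THRESHOLDS: Dict[str, float] = {
    "RP-8": 100.0,   # street and roadway lighting
    "RP-6": 180.0,   # sports and recreational lighting
    "RP-33": 80.0,   # outdoor lighting schemes
    "RP-2": 120.0,   # mercantile areas
    "RP-3": 90.0,    # schools and educational facilities
}


@dataclass
class GlowPoint:
    name: str          # hypothetical identifier for a sky-glow point
    category: str      # which RP standard applies to this point
    brightness: float  # measured brightness, same units as the threshold
    annual_kwh: float  # hypothetical metered energy for fixtures at this point


def excess_fraction(point: GlowPoint, thresholds: Dict[str, float]) -> float:
    """Share of the point's brightness lying above its threshold (0 if compliant)."""
    limit = thresholds[point.category]
    if point.brightness <= limit:
        return 0.0
    return (point.brightness - limit) / point.brightness


def wasted_kwh(point: GlowPoint, thresholds: Dict[str, float]) -> float:
    """Energy attributed to over-lighting, assuming energy scales linearly with light."""
    return point.annual_kwh * excess_fraction(point, thresholds)
```

With these placeholders, a roadway point measured at 150 units against a limit of 100 is one third over-lit, so 30,000 kWh of annual consumption would attribute about 10,000 kWh to over-illumination. The linear assumption ignores fixture efficiency and dimming behaviour, so it gives an order-of-magnitude figure at best.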

I identified the areas brighter than the lighting threshold by blending the geo-referenced base map and the NASA light pollution image in ImageJ and passing the result through a series of filters. ImageJ is open-source, Java-based image processing software. The first filter was a Gaussian high-pass filter, which sharpens the image and makes bright areas stand out against dark ones. I then applied a Gaussian low-pass filter to smooth the image, leaving a cleaner contrast between bright and dark pixels. Finally, I applied a nearest-neighbor filter to generalize individual points of up-lighting and spread the sky-glow pixels evenly around each area of up-lighting. This highlighted the individual points in the GTA contributing to up-lighting, but I still needed to quantify the light each point generated and how far it stood above the threshold set out in IESNA’s standards.
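The two Gaussian steps can be illustrated in a few lines. This is a minimal sketch, not ImageJ's implementation: it assumes a 3x3 binomial kernel as the Gaussian approximation, replicates edge pixels at the borders, and defines the high-pass result as the original minus its low-pass version. All function names are hypothetical, and the nearest-neighbor step is not reproduced because its exact settings aren't given.

```python
from typing import List

Image = List[List[float]]

# 3x3 binomial approximation of a Gaussian kernel; weights sum to 16.
KERNEL = [[1, 2, 1],
          [2, 4, 2],
          [1, 2, 1]]


def gaussian_low_pass(img: Image) -> Image:
    """Smooth the image with the 3x3 kernel, replicating edge pixels at borders."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr = min(max(r + dr, 0), rows - 1)  # clamp row index
                    cc = min(max(c + dc, 0), cols - 1)  # clamp column index
                    acc += KERNEL[dr + 1][dc + 1] * img[rr][cc]
            out[r][c] = acc / 16.0
    return out


def gaussian_high_pass(img: Image) -> Image:
    """High-pass detail: the original minus its Gaussian-smoothed version."""
    low = gaussian_low_pass(img)
    return [[p - q for p, q in zip(row, low_row)] for row, low_row in zip(img, low)]


def sharpen(img: Image, amount: float = 1.0) -> Image:
    """Add high-pass detail back to the original (unsharp-mask style sharpening)."""
    high = gaussian_high_pass(img)
    return [[p + amount * h for p, h in zip(row, high_row)]
            for row, high_row in zip(img, high)]
```

On a single bright pixel of 160 surrounded by zeros, the low-pass filter spreads it into a 40 at the centre with smaller values around it, while the high-pass output keeps 120 at the centre; a uniform image passes through the low-pass filter unchanged and has zero high-pass detail.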

Since ImageJ could not calculate an exact threshold, I needed another open-source package that was easy to use and, like ImageJ, could be used from Java. My answer was Open Source Computer Vision, better known as OpenCV. I loaded the blended image from ImageJ into OpenCV, made several copies of it, and applied a process called simple thresholding to them in series. The first copy was converted to greyscale so that each pixel had a single intensity value; the second was used to classify pixel values; and the third was used to set a lower lighting threshold. The three images were then overlaid and made transparent so that the detail in all three was visible. The result assigned a value to every pixel and showed how far each pixel was above the defined threshold. This order of filtering was suggested by an OpenCV technician, and it delineated light pollution areas around the GTA with high precision. OpenCV is well suited to environmental GIS work and is particularly strong at handling polygons in photo interpretation.
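The greyscale, threshold and "how far over" steps can be sketched without OpenCV itself. This is a minimal pure-Python illustration assuming RGB input: `to_grey` uses the luma weights OpenCV documents for its RGB-to-grey conversion, and `binary_threshold` follows the rule of OpenCV's `THRESH_BINARY` type. The helper names are hypothetical, and this does not reproduce the exact three-copy overlay used in the project.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
Grey = List[List[float]]


def to_grey(rgb_img: List[List[RGB]]) -> Grey:
    """Luma conversion with OpenCV's documented weights: 0.299 R + 0.587 G + 0.114 B."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb_img]


def binary_threshold(grey: Grey, thresh: float, maxval: float = 255.0) -> Grey:
    """Same rule as THRESH_BINARY: maxval where the pixel exceeds thresh, else 0."""
    return [[maxval if p > thresh else 0.0 for p in row] for row in grey]


def exceedance(grey: Grey, thresh: float) -> Grey:
    """How far each pixel sits above the threshold (0 at or below it)."""
    return [[max(0.0, p - thresh) for p in row] for row in grey]
```

The binary mask delineates which pixels are over the limit, while the exceedance grid keeps the magnitude needed for the energy estimate. In OpenCV's Python bindings the first two steps correspond to `cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)` (OpenCV loads images in BGR order by default) and `cv2.threshold(grey, thresh, 255, cv2.THRESH_BINARY)`, which returns a `(retval, image)` pair.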

Aerial image of the Greater Toronto Area (GTA), showing light pollution hotspots in white light. Major streets and highways can clearly be identified.

I’ve seen a couple of photometric surveys of cities in my time, and I must say that the data produced by this project is consistent with them. The most intriguing part is that it was all done through remote sensing.

The survey’s outcome is still unfolding, but a number of items are in progress:

- The City has released documents on decorative lighting and its contribution to the skyline, noting that this lighting should not only be efficient, sustainable and LED-based, but should also be turned off during bird migration periods. See page 60 of the Tall Building Design Guidelines (http://goo.gl/ddANm0).

- Many condo developers in the downtown core now turn decorative lighting off at 11 p.m. to comply with light pollution standards and the migratory bird guidelines set out by FLAP, the Fatal Light Awareness Program (http://www.flap.org/).

- Discussion and literature evaluating the efficiency of buildings with predominantly glass facades have been in circulation for some time. Glass facades not only make buildings more energy-intensive but also pose a hazard to birds, which often become disoriented and collide with them, suffering serious injury or death. There have also been many cases of glass falling from such buildings in Canadian cities. Ted Kesik, a prominent Toronto-based building scientist, has predicted that condo prices and maintenance fees will skyrocket over the next decade simply because of the use of glass (see The Condo Conundrum).

- Discussion of guidelines requiring full cut-off/fully shielded fixtures for outdoor lighting in the GTA, as some parts of Ottawa have done. See the Report to the Planning & Environmental Committee submitted by the Deputy City Manager, Planning, Transit and Environment, City of Ottawa.

References: Skyglow/Light Pollution – NASA